DT LOVE

[LATEST UPDATE]

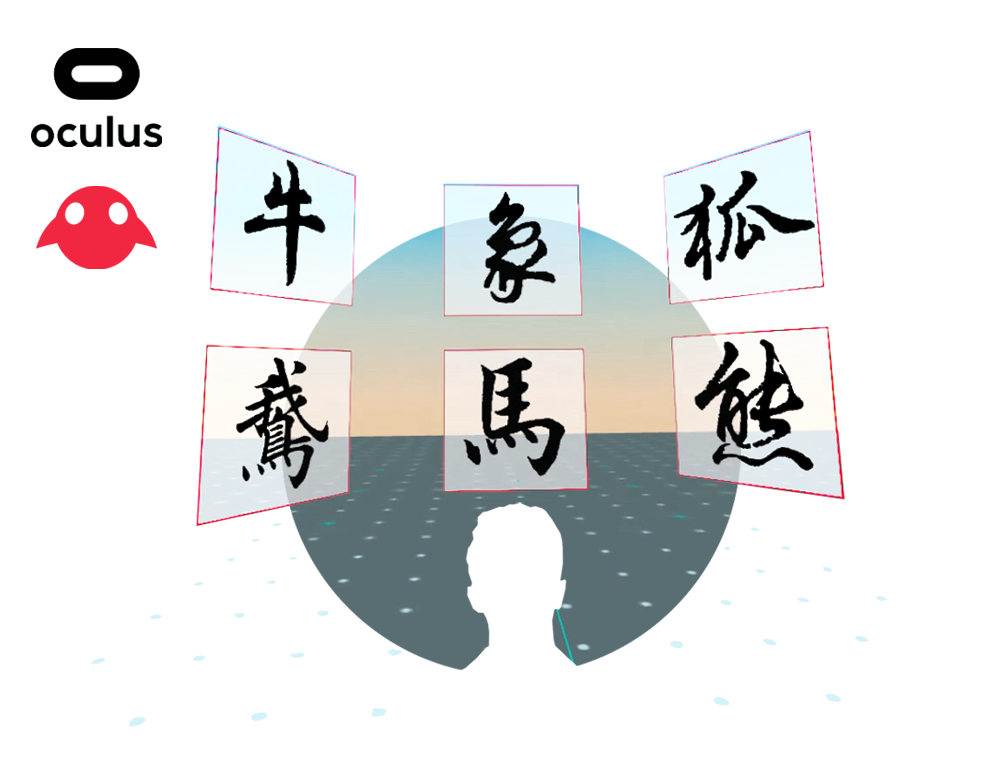

A VR application for individuals with Autism Spectrum Disorder (ASD)

- Topic: VR, autism

- Duration: April 2021 - Aug 2022

- Role: fullstack designer & developer, research assistant

- Prototyping Tools: Unity 3D, SketchUp for Architecture, Photoshop, Illustrator, Audacity

- Programming language & SDK: C#, Oculus Integration, Lumin SDK

- Platform: Oculus Quest, Magic Leap One

- Latest update: The initial testing results were presented at the Florida Association for Behavior Analysis (FABA) Conference, in a paper titled “Comparing Skill Acquisition and Latency to a Response in Discrete Trial Training across Virtual Reality, Augmented Reality, and Standard Teaching Methods”.

BACKGROUND

DT LOVE is short for Discrete Trial Learning Over Virtual Environments. It was originally a VR application that aimed to “replicate” discrete trial training, a teaching method proven effective for decades, for individuals with autism spectrum disorder (ASD).

In Spring 2021, Dr. Anibal Gutierrez from the Department of Psychology proposed a new study whose objective was to evaluate a training simulation in both VR and AR contexts with non-ASD subjects. This study is the preparation for a subsequent evaluation with individuals with ASD.

PROJECT GOAL

- Based on the existing application, develop a VR and an AR application with a new set of learning objects, and a new training structure.

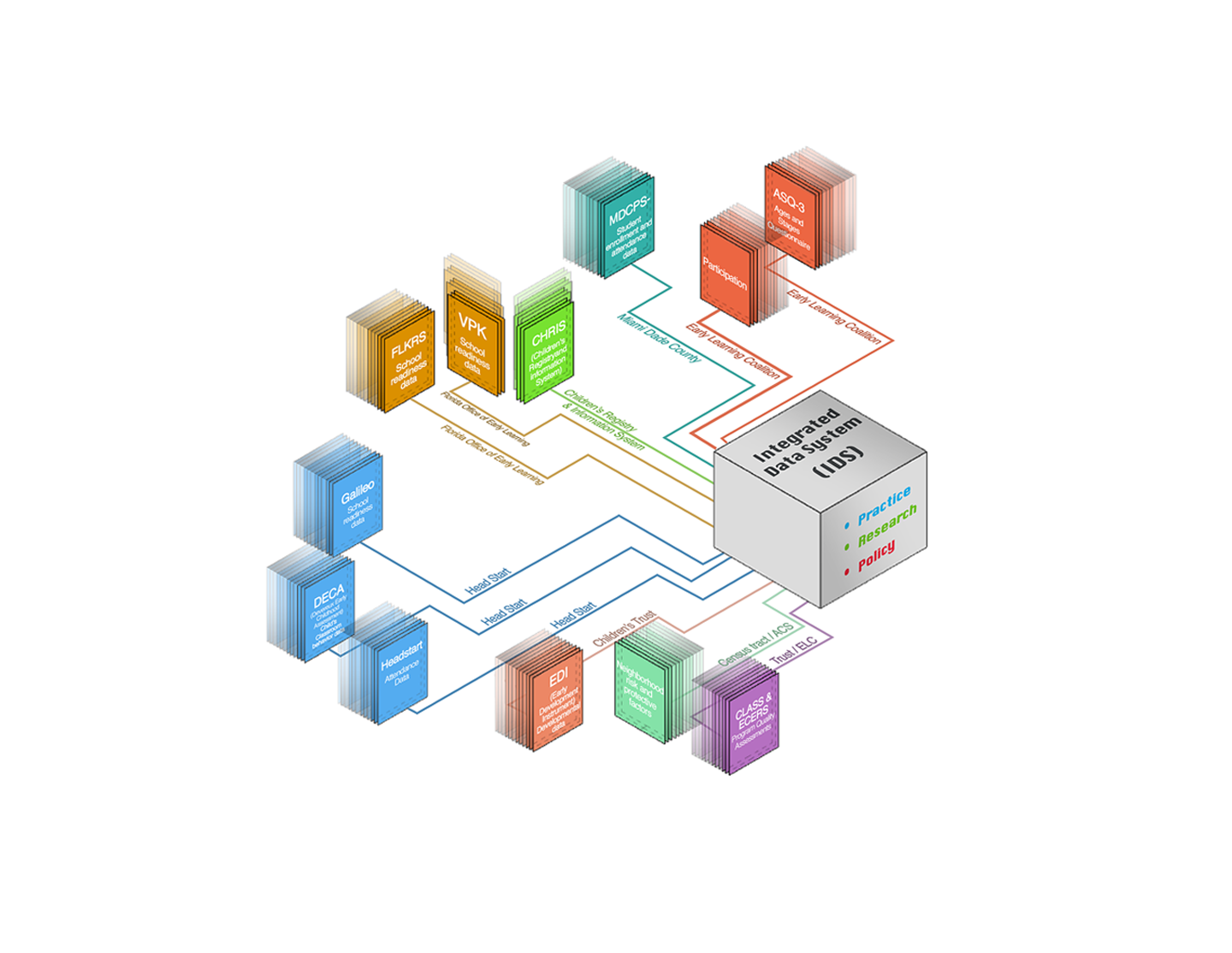

- Design and develop a user data-collection component that accesses user and device data in real time and sends it to a remote server.

process

The design and development of the new training simulation evolved alongside the iterations of the training structures proposed by researchers in the Department of Psychology. This documentation describes the current version of the application.

Demo

Demo with Oculus Quest 1

Demo with Magic Leap One

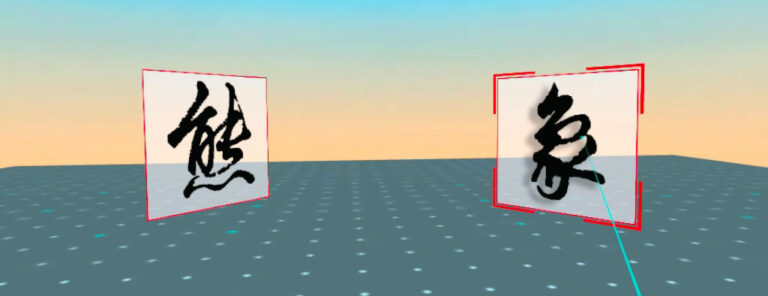

New Learning content

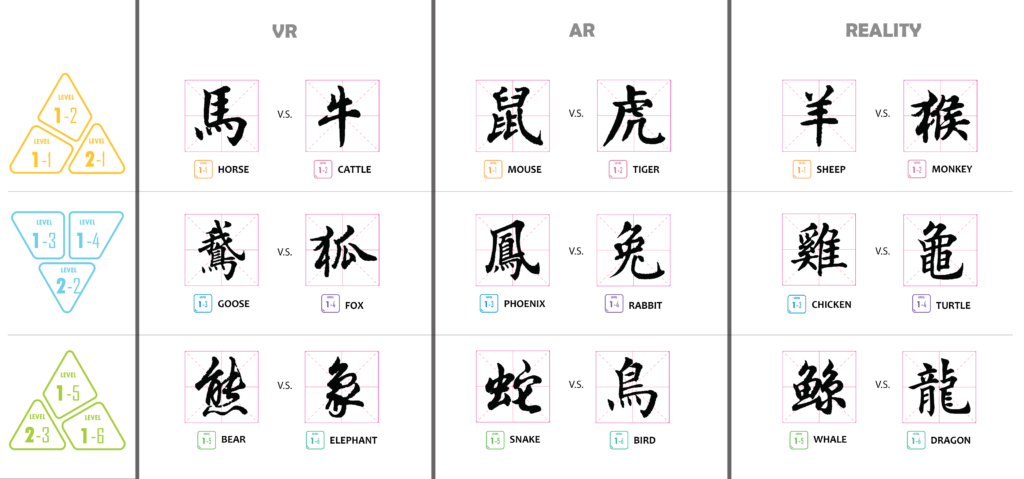

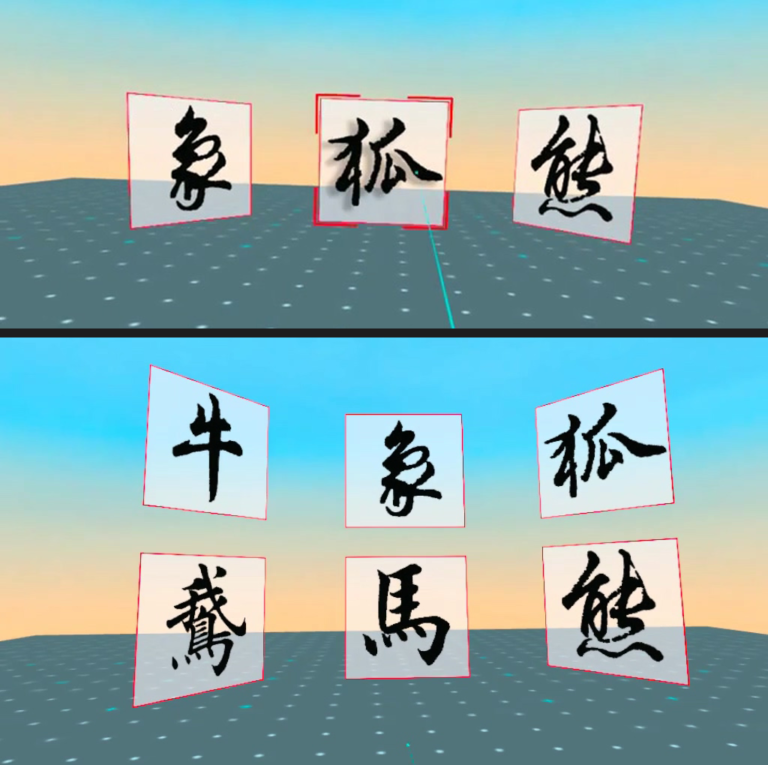

Instead of using 3D animals, researchers decided to use a new language as learning content across three different contexts: virtual reality, augmented reality, and “real” reality. A new language was chosen because it can be conveniently replicated (in the form of cards) in all three contexts.

So I chose three sets of Chinese characters as the new learning content.

new training structure

In this phase of application development, we added three new levels (with new training structures) to the application: “PRETEST”, “CONDITIONAL PRETEST”, and “FIELDS OF X”. The training structure in “Level One” and “Level Two” remains the same as in the previous application (which uses 3D animal models as learning content).

PRETEST

- The criteria for passing a trial in Pretest: no criteria

- The criteria for passing Pretest: no criteria.

- Rules of selecting (by the system) a target character for each trial

- For each trial, one of the six target characters will be randomly selected.

- The same target character won’t be selected successively more than twice.
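The PRETEST selection rules can be expressed as a small random-selection routine. The Python sketch below is only an illustration; the application itself is written in C# in Unity. The names `pick_target` and `run_pretest` are hypothetical. The sketch reads “won’t be selected successively more than twice” as follows: a character that has just been picked twice in a row is excluded from the next draw.

```python
import random

# The six target characters (three pairs) used as learning content.
CHARACTERS = ["马", "牛", "鵝", "狐", "熊", "象"]
MAX_REPEATS = 2  # a target may be selected at most twice in a row


def pick_target(history, candidates=CHARACTERS, rng=random):
    """Hypothetical helper: draw a random target, excluding any character
    that was already selected MAX_REPEATS times in a row."""
    recent = history[-MAX_REPEATS:]
    blocked = None
    if len(recent) == MAX_REPEATS and len(set(recent)) == 1:
        blocked = recent[0]
    allowed = [c for c in candidates if c != blocked]
    return rng.choice(allowed)


def run_pretest(num_trials, rng=random):
    """Hypothetical helper: generate the target sequence for a Pretest session."""
    history = []
    for _ in range(num_trials):
        history.append(pick_target(history, rng=rng))
    return history
```

For example, `run_pretest(12, random.Random(0))` produces a reproducible sequence of 12 targets. Seeding the generator this way is one option for replaying a session while debugging.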

CONDITIONAL PRETEST

Conditional Pretest consists of three sections: Baseline Test, Training, and Post Test.

BASELINE TEST & POST TEST

- The criteria for passing a trial in Baseline Test and Post Test: no criteria

- The criteria for passing Baseline Test and Post Test: no criteria

- Rules of selecting (by the system) a target character for each trial: same as “PRETEST”

TRAINING

- The criteria for passing a trial in Training: trigger the target character without prompts within 3s after the characters appear.

- The criteria for passing Training: trigger the target character without prompts three consecutive times for each of the three “pairs of characters” (“horse” (马) and “cattle” (牛), “goose” (鵝) and “fox” (狐), “bear” (熊) and “elephant” (象)).

- Rules of selecting (by the system) a target character for each trial:

- Randomly select a “pair of characters” among the three pairs of characters.

- Given the pair of characters, randomly select target character within the pair.

- The same target character won’t be selected successively more than twice.

- If both characters in the pair are selected correctly in time (within 3s) three consecutive times, move to the next pair of characters.
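The Training section's pair-by-pair structure can be sketched as a loop over the three pairs. This is a hypothetical Python illustration, not the project's C# code. It rests on three assumptions:

- the order of the pairs is a random shuffle;
- a trial passes when the response is correct and arrives within 3 s;
- a pair is finished when each of its two characters has three consecutive passing trials.

The `respond` callback stands in for the participant, and prompts are not modeled. The `max_trials` cap is a hypothetical safety limit.

```python
import random

# The three pairs of characters used in Conditional Pretest training.
PAIRS = [("马", "牛"), ("鵝", "狐"), ("熊", "象")]
RESPONSE_WINDOW_S = 3.0  # a response must arrive within 3 s
STREAK_TO_PASS = 3       # consecutive passing trials required per character (assumed)
MAX_REPEATS = 2          # a target may be selected at most twice in a row


def pick_from(candidates, history, rng):
    """Random pick that never selects the same target three times in a row."""
    recent = history[-MAX_REPEATS:]
    if len(recent) == MAX_REPEATS and len(set(recent)) == 1:
        candidates = [c for c in candidates if c != recent[0]]
    return rng.choice(candidates)


def train_conditional(respond, rng=random, max_trials=1000):
    """Hypothetical training loop. respond(target) -> (correct, latency_s).
    Returns a log of (pair_index, target, passed) tuples."""
    log, history = [], []
    order = list(range(len(PAIRS)))
    rng.shuffle(order)  # visit the pairs in a random order
    for pair_index in order:
        streak = {c: 0 for c in PAIRS[pair_index]}
        # Stay on this pair until both characters reach the required streak.
        while min(streak.values()) < STREAK_TO_PASS:
            if len(log) >= max_trials:
                return log
            target = pick_from(list(PAIRS[pair_index]), history, rng)
            history.append(target)
            correct, latency = respond(target)
            passed = correct and latency <= RESPONSE_WINDOW_S
            streak[target] = streak[target] + 1 if passed else 0
            log.append((pair_index, target, passed))
    return log
```

With a participant who always answers correctly in 1 s, `train_conditional(lambda t: (True, 1.0))` visits each pair exactly once, in one contiguous block of trials.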

FIELDS OF X

Fields of X consists of three sections: Baseline Test, Training, and Post Test.

BASELINE TEST & POST TEST

- The criteria for passing a trial in Baseline Test and Post Test: no criteria

- The criteria for passing Baseline Test and Post Test: no criteria

- Rules of selecting a target character (for testing) for each trial: same as “PRETEST”

TRAINING

- The criteria for passing a trial in Training: trigger the target character without prompts within 3s after the characters appear.

- The criteria for passing Training: trigger the target character without prompts three consecutive times for each of the three “pairs of characters” (“horse” (马) and “cattle” (牛), “goose” (鵝) and “fox” (狐), “bear” (熊) and “elephant” (象)).

- Rules of selecting (by the system) a target character for each trial:

- Randomly select a character among the six target characters.

- The same target character won’t be selected successively more than twice.

- If the user doesn’t make the right response within the time frame (3s), the same target character is selected again until the user gets it right.
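In FIELDS OF X, a missed target is repeated until it is answered in time. The hypothetical Python sketch below combines that rule with the no-more-than-twice rule. It applies the no-more-than-twice rule only to fresh random picks, so a retried target never counts against it. That precedence is an assumption, because the rules above do not say how the two interact. The number of trials is passed in because this sketch does not model the section's stopping criterion.

```python
import random

RESPONSE_WINDOW_S = 3.0  # a response must arrive within 3 s
MAX_REPEATS = 2          # fresh picks may repeat a target at most twice in a row


def fields_of_x_training(characters, respond, num_trials, rng=random):
    """Hypothetical Fields of X training loop (needs at least two characters).
    respond(target) -> (correct, latency_s). Returns (target, passed) tuples."""
    fresh_picks = []  # targets chosen by the random rule (retries excluded)
    log = []
    target = None
    retry = False
    for _ in range(num_trials):
        if not retry:
            recent = fresh_picks[-MAX_REPEATS:]
            allowed = list(characters)
            if len(recent) == MAX_REPEATS and len(set(recent)) == 1:
                allowed.remove(recent[0])
            target = rng.choice(allowed)
            fresh_picks.append(target)
        correct, latency = respond(target)
        passed = correct and latency <= RESPONSE_WINDOW_S
        log.append((target, passed))
        retry = not passed  # repeat the same target until it is answered in time
    return log
```

A participant who always responds too late therefore sees a single target for the whole run. This makes the retry rule easy to spot when checking the sketch.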

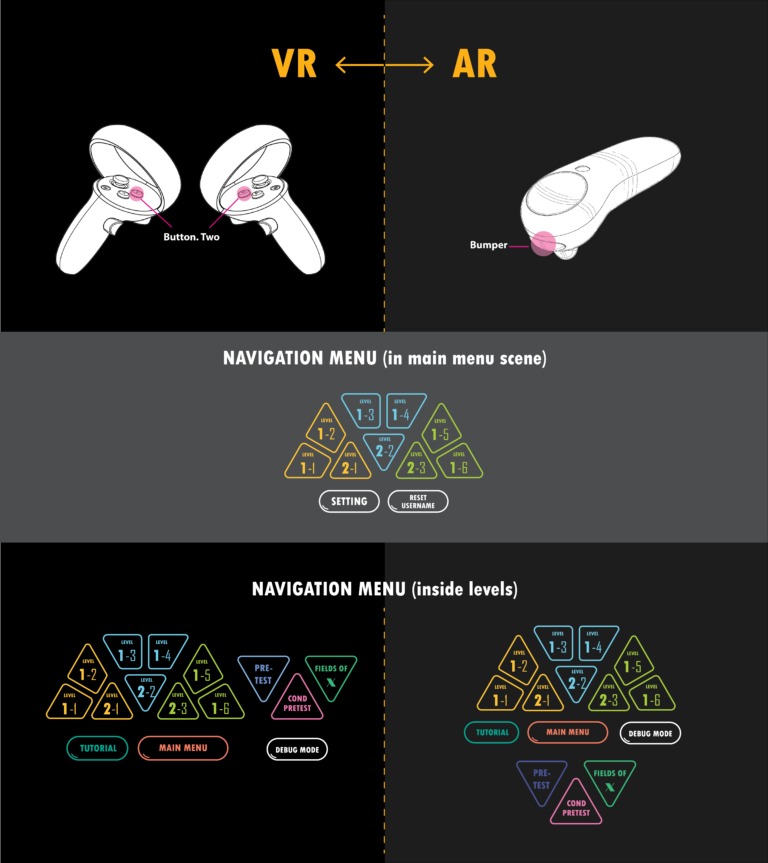

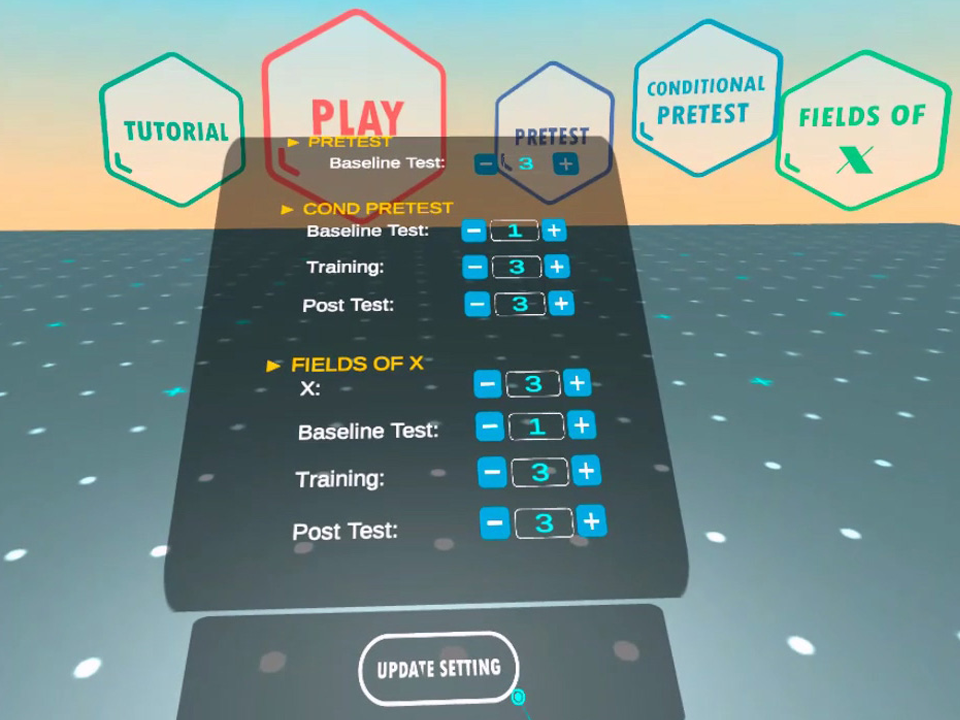

navigation component

Press a button on the controller to bring up the navigation panel, which lets the user navigate between levels.

data-collection component

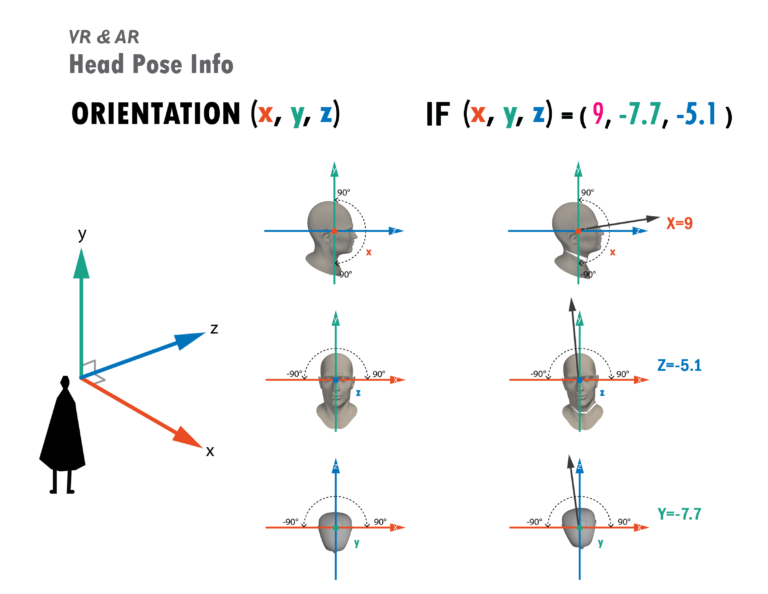

At the end of each trial, user data will be sent from the device and stored in a Google Spreadsheet.

The required user data is not always one-dimensional. For example, the user’s head orientation is recorded as “(x, y, z)”. The infographic below explains the meaning of “x”, “y”, and “z”.

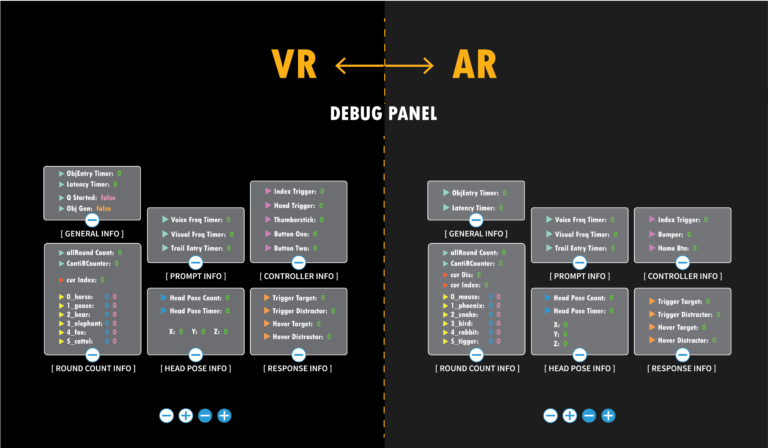

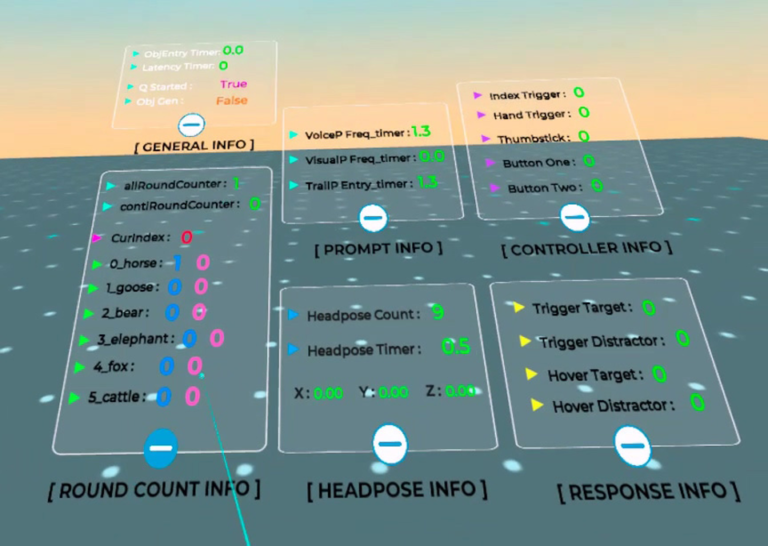

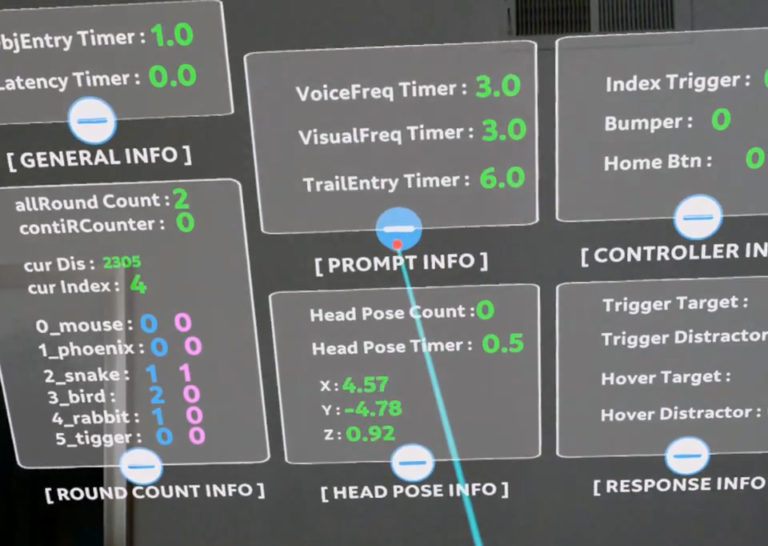
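A trial's data mixes scalar values with vectors such as head orientation, so each trial has to be flattened into a single spreadsheet row. The Python sketch below shows one way to do that. The column set and the `TrialRecord`, `format_vector`, and `to_row` names are hypothetical. Sending the row to the Google spreadsheet (the transport) is not shown.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TrialRecord:
    # Hypothetical columns; the actual spreadsheet layout is not listed here.
    player_name: str
    level: str
    trial: int
    target: str
    correct: bool
    latency_s: float
    head_orientation: Tuple[float, float, float]


def format_vector(v, digits=2):
    """Render a 3D value as a single "(x, y, z)" cell."""
    return "(" + ", ".join(f"{c:.{digits}f}" for c in v) + ")"


def to_row(r: TrialRecord):
    """Flatten one trial into a list of cell strings for a spreadsheet row."""
    return [
        r.player_name,
        r.level,
        str(r.trial),
        r.target,
        "TRUE" if r.correct else "FALSE",
        f"{r.latency_s:.3f}",
        format_vector(r.head_orientation),
    ]
```

Keeping each vector in one “(x, y, z)” cell matches the recording format described above. Splitting x, y, and z into three columns would be an alternative if the data is analyzed numerically later.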

debug component

Because the application runs on head-mounted displays (HMDs), it is hard to debug, and debugging is especially necessary for the data-collection component. I therefore created a debug component for both the VR and AR versions. It provides a visual check of the accuracy of the user data calculated by the device.

Researchers can open the debug panel (from the navigation panel) in any level to check whether the application’s timers are functioning as intended.
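A timer whose clock can be swapped out is one way to make the 3s response window checkable, either on a debug panel or in a test without a headset. The Python class below is a hypothetical sketch, not the project's Unity implementation. In the application, the engine would supply the clock instead of `time.monotonic`.

```python
import time


class ResponseTimer:
    """Hypothetical trial timer. The clock is injectable so the 3 s window
    can be verified without running on a headset."""

    def __init__(self, window_s=3.0, clock=time.monotonic):
        self.window_s = window_s
        self.clock = clock
        self.start = None

    def characters_shown(self):
        # Start timing when the characters appear.
        self.start = self.clock()

    def elapsed(self):
        if self.start is None:
            raise RuntimeError("timer not started")
        return self.clock() - self.start

    def in_window(self):
        # True while a response would still count as "in time".
        return self.elapsed() <= self.window_s
```

Replacing the clock with a fake one (for example, a function returning a value the test controls) lets the window boundary be checked exactly, which is the kind of validation the debug panel performs visually.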

other features

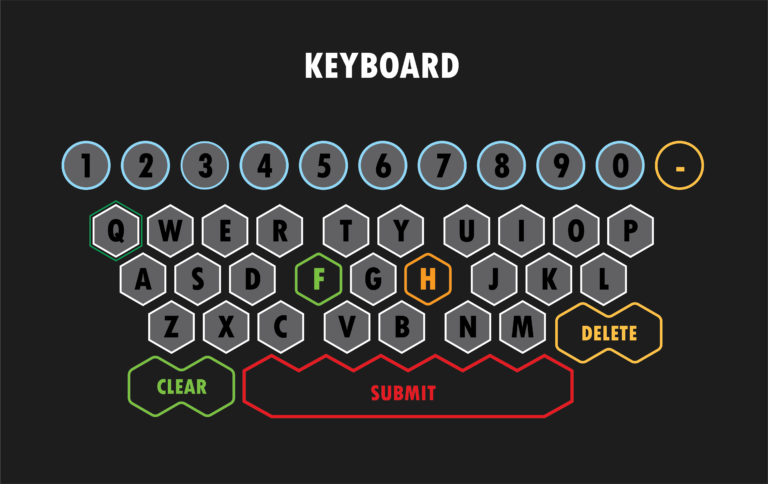

SETTING PLAYER NAME

To distinguish the data of different users, the user can set a player name with a keyboard in the “Main menu scene”. When researchers check the user data in the Google spreadsheet, the data from each trial then includes “Player Name” as one data point.

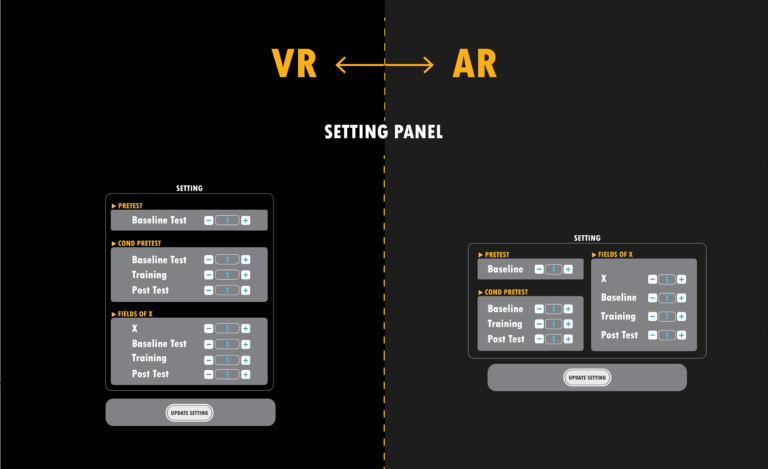

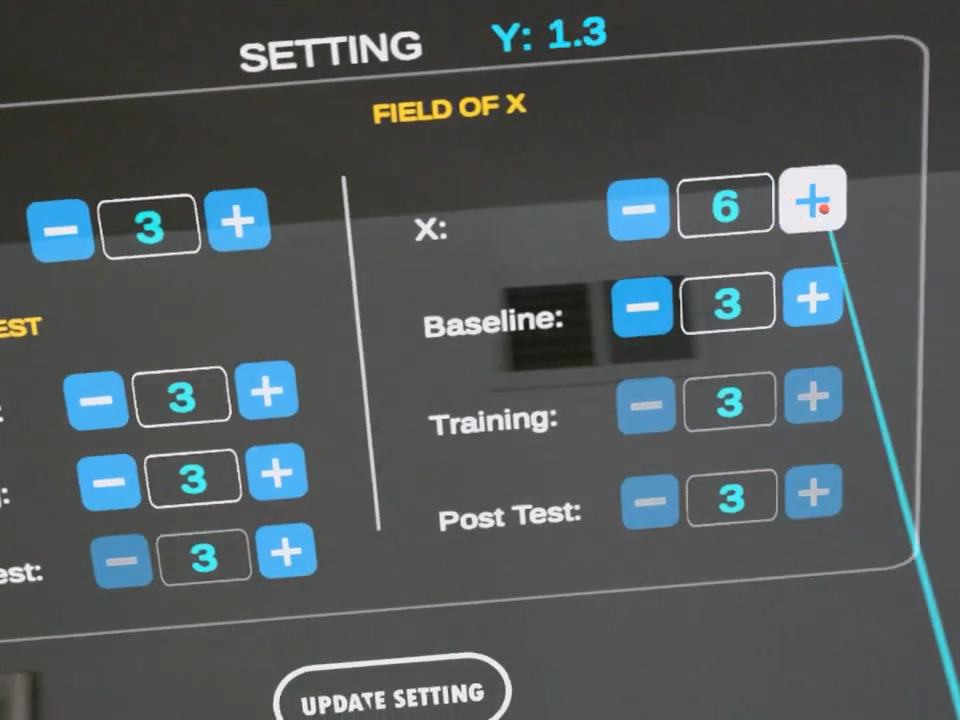

CUSTOMIZE PARAMETERS

Researchers can use the settings panel to change the “passing criteria” in each section of “PRETEST”, “CONDITIONAL PRETEST”, and “FIELDS OF X”, and to customize the number (“X”) of characters in “FIELDS OF X”.

This feature allows researchers to test various scenarios without a developer.
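The customizable parameters can be grouped into a single settings object that each level reads from. The Python dataclasses below are a hypothetical sketch. Their defaults mirror the values described above: a 3s window, three consecutive correct responses, and six characters. PRETEST is omitted because it has no passing criteria. The minimum of two characters is an assumption, made so that the selection rules always have an alternative target.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class SectionCriteria:
    response_window_s: float = 3.0  # response must arrive within this many seconds
    streak_to_pass: int = 3         # consecutive correct responses required


@dataclass(frozen=True)
class StudySettings:
    # Hypothetical structure; defaults mirror the values described in this write-up.
    conditional_training: SectionCriteria = field(default_factory=SectionCriteria)
    fields_training: SectionCriteria = field(default_factory=SectionCriteria)
    fields_x: int = 6  # number of characters ("X") in Fields of X

    def __post_init__(self):
        # Assumed constraint: the selection rules need an alternative target.
        if self.fields_x < 2:
            raise ValueError("Fields of X needs at least two characters")
```

A researcher's change then becomes a new immutable copy, for example `replace(StudySettings(), fields_x=4)`. This keeps a running session from being affected by edits made mid-trial.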

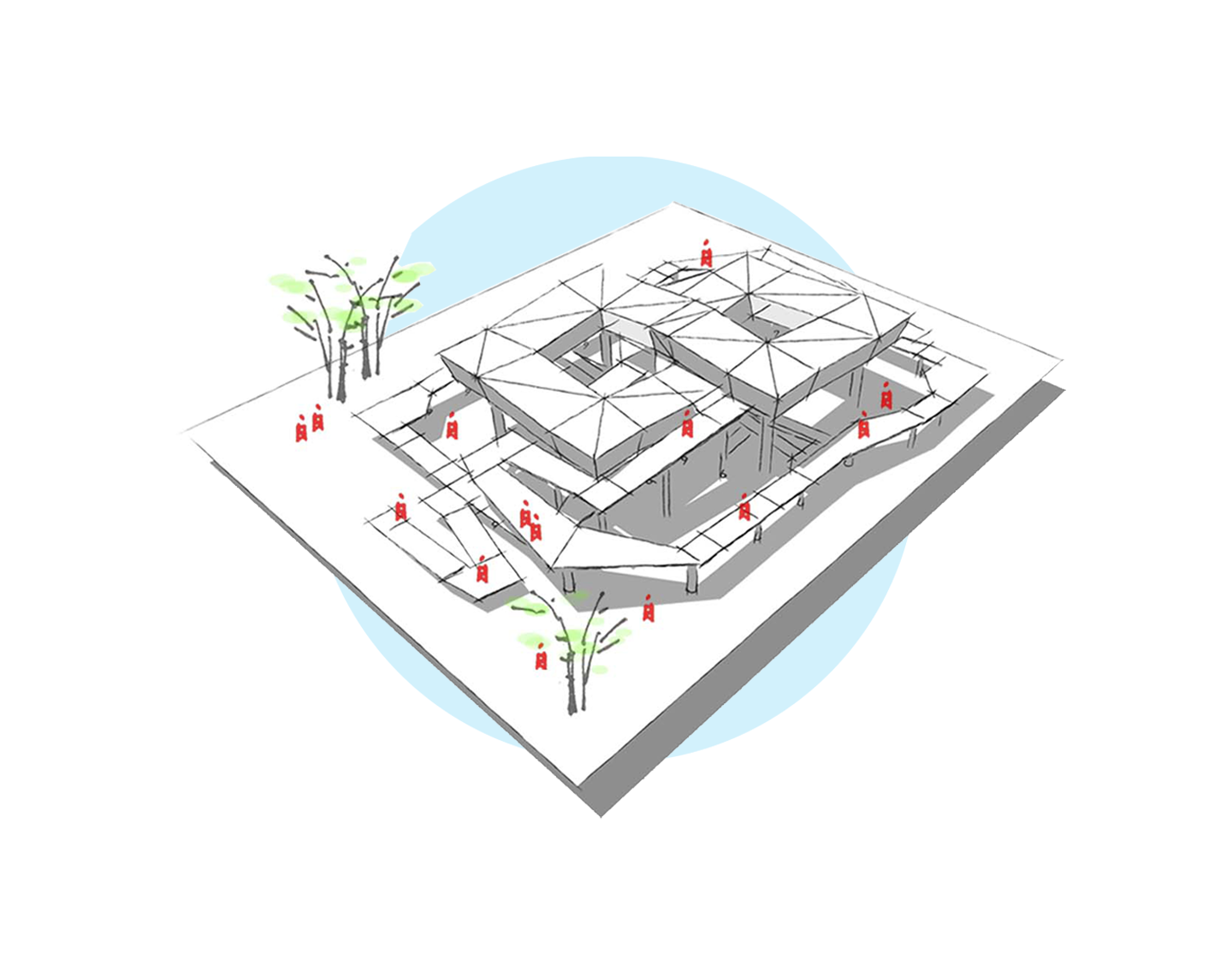

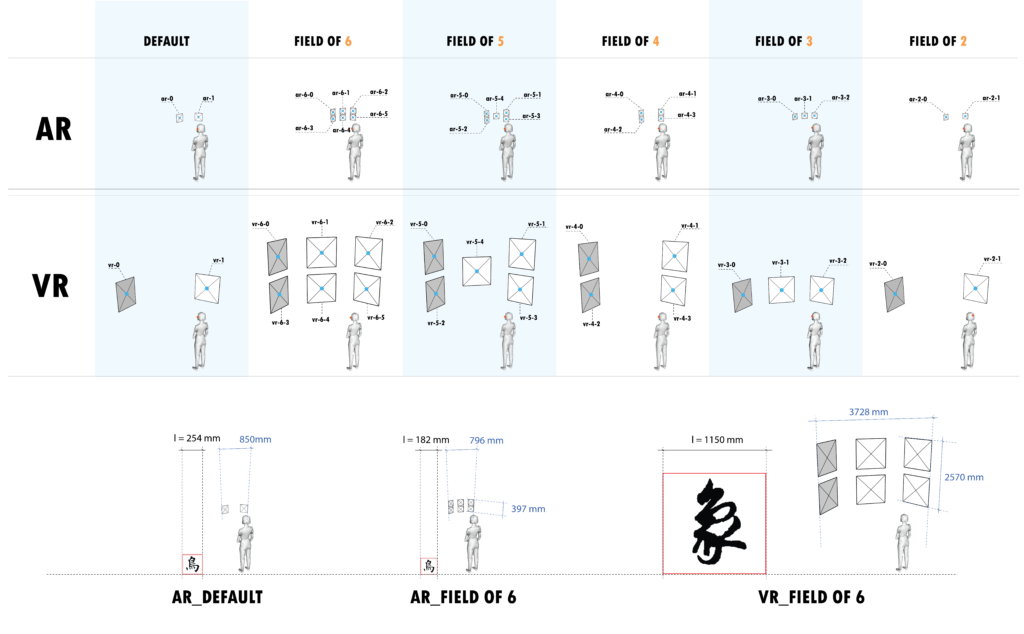

spatial layout

Because the fields of view (FOV) of Oculus Quest (1 & 2) and Magic Leap One are very different, the spatial layout and the scale of elements in the scene become significant.

- FOV of Oculus Quest (1 & 2): horizontal FOV is 104° (±4°); vertical FOV is 89° (±4°)

- FOV of Magic Leap One: horizontal FOV is 40°; vertical FOV is 30°
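The effect of the FOV difference on layout can be estimated with basic trigonometry. For a symmetric field of view θ, the extent visible on a plane at distance d is w = 2 d tan(θ/2). The Python sketch below applies this formula to the figures above. It idealizes each headset's FOV as a symmetric frustum, so treat the results as rough guides.

```python
import math


def visible_extent(fov_deg, distance_m):
    """Width (or height) of the plane at distance_m that fits inside a symmetric FOV."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def angular_size(size_m, distance_m):
    """Angle in degrees subtended by an object of size_m at distance_m."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


# Nominal (horizontal, vertical) FOV in degrees, from the list above.
DEVICES = {
    "Oculus Quest (1 & 2)": (104.0, 89.0),
    "Magic Leap One": (40.0, 30.0),
}

for name, (h, v) in DEVICES.items():
    print(f"{name}: {visible_extent(h, 1.0):.2f} m x {visible_extent(v, 1.0):.2f} m at 1 m")
```

At 1 m, this gives roughly 2.56 m × 1.97 m for Oculus Quest and 0.73 m × 0.54 m for Magic Leap One. A layout that fills the Quest view would therefore be heavily clipped on Magic Leap One. `angular_size` answers the inverse question: whether a card of a given size will fit at a given distance.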

next step

Explore the spatial impact on research results (for the VR version)

- Implement the “passthrough” feature in the project. Oculus Quest 2 has a new feature that lets users enter an “AR mode” that is “video see-through”, rather than “optical see-through” like Magic Leap One. It may be interesting to have a button in the VR version to toggle this “AR mode”.

- Add other environmental elements (like trees), and use a button to toggle between different environmental settings.

- Implement a feature that can change the viewing angle of players.

Explore the auditory impact on research results (for the VR version)

- Add a button to toggle ambient sound.